Configure Lineage¶

Alation Cloud Service Applies to Alation Cloud Service instances of Alation

Customer Managed Applies to customer-managed instances of Alation

The OCF connector for Azure Power BI automatically calculates lineage information during metadata extraction. The lineage information you get in Alation depends on the Alation version and the connector version:

Lineage for Power BI dataflow objects is supported from Alation version 2023.3.3

Column-level lineage is supported from Alation version 2024.1.2 and connector version 2.2.3

Note

Column-level lineage is enabled by default from Alation version 2024.1.5. On older versions, a Server Admin enables it in Admin Settings > Feature Configuration. Learn more in Enable Column-Level Lineage below.

By default, the connector generates report-level lineage (dataset > report > dashboard). You have the ability to perform additional configurations to get more lineage information on lineage charts:

Configure Cross-System Lineage¶

You have the ability to configure cross-system lineage to generate lineage between your Power BI Scanner BI source and an RDBMS data sources supported by the connector for lineage:

Amazon Redshift

Azure Databricks

Azure SQL DB

Azure Synapse Analytics

Databricks on AWS

Databricks Unity Catalog

Google BigQuery

MySQL

Oracle

PostgreSQL

Snowflake

SQL Server

Teradata

Note

RDBMS data sources must be cataloged in Alation and be connected with the corresponding OCF connector.

Cross-system lineage is configured in the settings of an RDBMS data source. To generate cross-system lineage, configure the BI Connection Info field (Additional datasource connections field) on the RDBMS connector’s General Settings tab in the format host:port (for example, adb-8443049157651279.19.azuredatabricks.net:443). Find more information in Configure Cross-Source Lineage.

The screenshot below shows cross-system lineage configuration on the General Settings tab of a supported RDBMS data source:

Column-Level Lineage¶

From Alation version 2024.1.2 and connector version 2.2.3, you have the ability to enable lineage calculation for columns:

With column-level lineage enabled, Alation will generate lineage information for Power BI reports on the report field level.

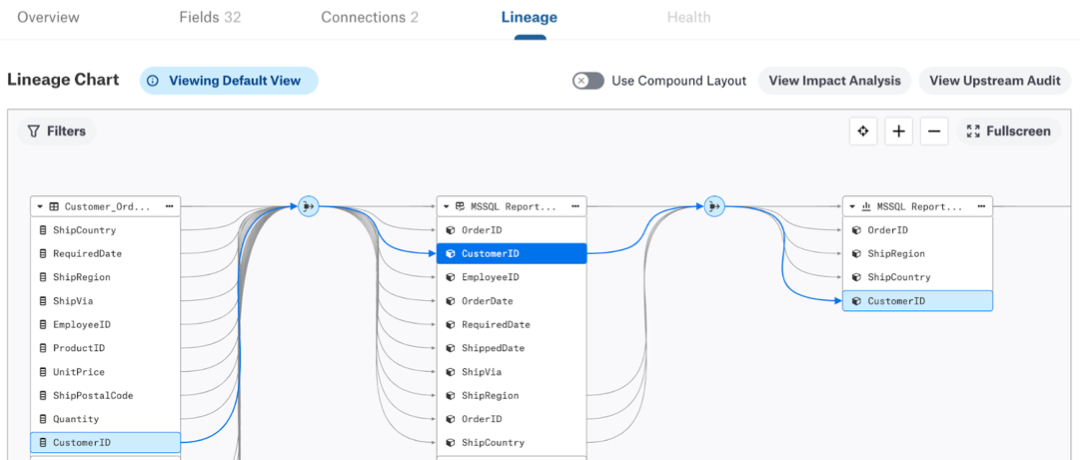

With both column-level lineage and cross-system lineage configured, users will be able to trace lineage from a specific RDBMS column to a specific report field in Power BI:

Enable Column-Level Lineage¶

Applies from connector version 2.2.3

To enable column-level lineage:

Starting with version 2024.1.5, column-level lineage is enabled by default. The corresponding feature configuration flag has been removed from Admin Settings > Feature Configuration.

To enable column-level lineage generation, Enable report field extraction.

Log in to Alation as a Server Admin.

Click the three gears icon on top right to open the Admin Settings page.

Under the Server Admin section, click Feature Configuration.

Locate the toggle Automatically extracts Column Level Lineage from Power BI Scanner data source. Click the toggle to activate the feature.

Click Save changes on the top right of the page to apply the change.

Enable Report Field Extraction¶

To enable report field extraction:

Go to the General Settings page of your BI source.

Enable the Enable Report Fields Extraction flag and click Save.

Click Run Extraction Now to extract metadata.

Lineage for your Power BI Scanner OCF source will be generated at the column level.

Note

Enabling the report field extraction can increase the extraction time, as it is dependent on the PBIX report export and parsing, which might take more time depending on file size.

Note

To download the PBIX report file for report columns extraction in column-level lineage, you must provide the service principal either Admin, Contributor, or Member access to the workspace in the Connector Settings section of General Settings tab of the PowerBI OCF connector. For information on how to add a service principal to a workspace, refer to Give service principal access to workspace in the Azure PowerBI documentation.

Additionally, you must also enable the Tenant Setting to download reports. To do so:

Note

For information on using the Lineage Settings tab for configuration, contact Alation Support.

Expressions Supported by Lineage¶

Lineage between data source tables and Power BI datasets is built through parsing dataset expressions which are Power BI M Queries (Power Queries). Alation gets these expressions as responses from the getScanResult API. Lineage between tables and datasets will only be generated if expressions are in one of the formats shown below.

Database Name |

Connection Type |

Sample Expression |

|---|---|---|

Azure Databricks |

Table |

"let\n Source = Databricks.Contents(\"adb-8651250466505288.8.azuredatabricks.net\", \"sql/protocolv1/o/8651250466505288/

0622-214404-awash362\", [Database=null, BatchSize=null]),\n SPARK_Database = Source{[Name=\"SPARK\",Kind=\"Database\"]}

[Data],\n default_Schema = SPARK_Database{[Name=\"default\",Kind=\"Schema\"]}[Data],\n diamonds_Table =

default_Schema{[Name=\"diamonds\",Kind=\"Table\"]}[Data]\nin\n diamonds_Table";

|

AWS Databricks |

Table |

“let\n Source = Databricks.Catalogs(\"dbc-25e69bfd-44ed.cloud.databricks.com\", \"sql/protocolv1/o/7841352139603430/

0118-050057-4xra3flu\", [Database=null, BatchSize=null]),\n hive_metastore_Database = Source{[Name=\"hive_metastore\",

Kind=\"Database\"]}[Data],\n technology_Schema = hive_metastore_Database{[Name=\"default\",Kind=\"Schema\"]}[Data],

\n strm_dems_Table = technology_Schema{[Name=\"student2\",Kind=\"Table\"]}[Data]\n in\n

strm_dems_Table\n”;

“let\n Source = DatabricksMultiCloud.Catalogs(\"dbc-25e69bfd-44ed.cloud.databricks.com\", \"sql/protocolv1/o/

7841352139603430/0210-085930-oexhpgse\", [Catalog=null, Database=null, EnableAutomaticProxyDiscovery=null]),\n

hive_metastore_Database = Source{[Name=\"hive_metastore\",Kind=\"Database\"]}[Data],\n default_Schema = hive_metastore_

Database{[Name=\"default\",Kind=\"Schema\"]}[Data],\n student2_Table = default_Schema{[Name=\"student2\",Kind=\"Table\"]}

[Data]\n in\n student2_Table\n”;

|

Unity Databricks |

Table |

“let\n Source = Databricks.Catalogs(\"adb-8443049157651279.19.azuredatabricks.net\", \"sql/protocolv1/o/

900788168547414/1031-122656-8zrkv0jk\", [Catalog=\"default\", Database=null, EnableAutomaticProxyDiscovery=null]),\n

hive_metastore_Database = Source{[Name=\"hive_metastore\",Kind=\"Database\"]}[Data],\n default_Schema = hive_metastore_

Database{[Name=\"default\",Kind=\"Schema\"]}[Data],\n t1_Table = default_Schema{[Name=\"t1\",Kind=\"Table\"]}

[Data]\nin\n t1_Table”;

|

SQL Server |

Table |

"let\n Source = Sql.Databases(\"ifac-sqlsrv.ceeyrlqdpprr.us-west-2.rds.amazonaws.com\"),\n Sales = Source{[Name=\"Sales\

"]}[Data],\n dbo_Customer_Orders = Sales{[Schema=\"dbo\",Item=\"Customer_Orders\"]}[Data]\nin\n dbo_Customer_Orders";

"let\n Source = Sql.Databases(\"tcp:ifac-sqlsrv.ceeyrlqdpprr.us-west-2.rds.amazonaws.com,1433\"),\n AdventureWorks =

Source{[Name=\"AdventureWorks\"]}[Data],\n Sales_vPersonDemographics =

AdventureWorks{[Schema=\"Sales\",Item=\"vPersonDemographics\"]}[Data]\nin\n Sales_vPersonDemographics";

"let\n Source = Sql.Databases("tcp:sqlwrhlondonprod.b05aaf70da1f.database.windows.net"),\n LONDON = Source{[Name="london"]}

[Data],\n london_pbi_SHIFTREPORT_REACHSTAT_Allinone = LONDON{[Schema="london",Item="pbi_SHIFTREPORT_REACHSTAT_Allinone"]}

[Data],\n #"Filtered Rows" = Table.SelectRows(london_pbi_SHIFTREPORT_REACHSTAT_Allinone, each [P_sysdate] >= RangeStart and

[P_sysdate] <= RangeEnd)\nin\n #"Filtered Rows""

"let\n Source = Sql.Databases(\"10.41.81.166:1433\"),\n AdventureWorks = Source{[Name=\"AdventureWorks\"]}[Data],\n

#\"Filtered Rows\" = Table.SelectRows(Sales_vIndividualCustomer, each Text.StartsWith([Schema], \"Adv\")),\n

Sales_vIndividualCustomer = AdventureWorks{[Schema=\"Sales\",Item=\"vIndividualCustomer\"]}[Data]\n

\nin\n Sales_vIndividualCustomer" **(Supported from version 2.5.0)**

|

Query |

"let\n Source = Sql.Database(\"ifac-sqlsrv.ceeyrlqdpprr.us-west-2.rds.amazonaws.com\", \"pubs\", [Query=\"select * from

jobs\"])\nin\n Source";

"let\n Source = Sql.Database(\"synapse-edw-d.sql.azuresynapse.net\", \"edwsyndsql\", [Query=\"SeLeCt#(lf) s.f_name,#(lf)

t.f_name#(lf)from #(lf) tmp.src_test s#(lf)inner JOIN #(lf) tmp.tgt_test t#(lf)ON #(lf) s.f_name = t.f_name\"])\nin

\n Source";

"let\n Source = Sql.Database(\"synapse-edw-d.sql.azuresynapse.net\", \"edwsyndsql\", [Query=\"select#(lf) s.f_name,#(lf)

t.f_name#(lf)from #(lf) tmp.src_test s#(lf)inner JOIN #(lf) tmp.tgt_test t#(lf)ON #(lf) s.f_name = t.f_name\"])\nin

\n Source";

"let\n Source = Sql.Database(\"10.13.12.200:1433\", \"test_database\", [Query=\"select * from

\"\"test_profilling_main.arcs.test\"\".\"\"arcstable\"\"\"])\nin\n Source";

|

|

MySQL |

Table |

"let\n Source = MySQL.Database(\"ifac-mysql.ceeyrlqdpprr.us-west-2.rds.amazonaws.com:3306\", \"employees\",

[ReturnSingleDatabase=true]),\n employees_departments = Source{[Schema=\"employees\",Item=\"departments\"]}[Data]\nin\n

employees_departments";

|

Query |

"let\n Source = MySQL.Database(\"ifac-mysql.ceeyrlqdpprr.us-west-2.rds.amazonaws.com:3306\", \"crm\", [ReturnSingleDatabase

=true, Query=\"select c.customerNumber , c.customername, c.city, c.country from customers c , orders o where

c.customernumber=o.customernumber\"])\nin\n Source";

|

|

Azure SQL |

Table |

"let\n Sql.Database(\"tf-testal-94619nimeshkuma-17.database.windows.net\", \"SqlServerAzDB_1\"),\n

schemaWithViews01_testView01 = Source{[Schema=\"schemaWithViews01\",Item=\"testView01\"]}[Data]\nin\n

schemaWithViews01_testView01";

|

Azure Synapse |

Table |

"let\n Source = Sql.Database(\"synaptestal125371ayush24-ondemand.sql.azuresynapse.net\", \"master\"),\n

dbo_MSreplication_options = Source{[Schema=\"dbo\",Item=\"MSreplication_options\"]}[Data]\nin\n

dbo_MSreplication_options";

|

Oracle |

Table |

"let\n Source = Oracle.Database(\"ifac-orcl.ceeyrlqdpprr.us-west-2.rds.amazonaws.com:1521/orcl\", [HierarchicalNavigation=

true]),\n IFAC_ADMIN = Source{[Schema=\"IFAC_ADMIN\"]}[Data],\n ORDER_ITEMS1 = IFAC_ADMIN{[Name=\"ORDER_ITEMS\"]}[Data]\

nin\n ORDER_ITEMS1";

|

Postgres |

Table |

"let\n Source = PostgreSQL.Database(\"ifac-pgsql.ceeyrlqdpprr.us-west-2.rds.amazonaws.com:5432\", \"postgres\"),\n

public_events = Source{[Schema=\"public\",Item=\"events\"]}[Data]\nin\n public_events";

|

Amazon Redshift |

Table |

"let\n Source = AmazonRedshift.Database(\"redshift-cluster-1.csjsqfswsudr.us-east-1.redshift.amazonaws.com:5439\", \"dev\",

[BatchSize=null]),\n public = Source{[Name=\"public\"]}[Data],\n category1 = public{[Name=\"category\"]}[Data]\nin\n

category1";

|

Query |

"let\n Source = Value.NativeQuery(AmazonRedshift.Database(\"warehouse.data-platform.safetyio.com\",\"production\"), \n

\n \"\n select distinct \n \n document_type\n\n from prod_object_layer.ol__ar_aging_all_customer_line_items

\n\n where days_past_due_bucket is not null\n\n\", null, [EnableFolding=true]),\n

#\"Sorted Rows\" = Table.Sort(Source,{{\"document_type\", Order.Ascending}})\nin\n #\"Sorted Rows\""

|

|

Snowflake |

Table |

"let\n Source = Snowflake.Databases(\"alation_partner.us-east-1.snowflakecomputing.com\", \"LOAD_WH\", [Role=null,

CreateNavigationProperties=null, ConnectionTimeout=null, CommandTimeout=null]),\n TABSFDC_Database =

Source{[Name=\"TABSFDC\",Kind=\"Database\"]}[Data],\n PUBLIC_Schema = TABSFDC_Database{[Name=\"PUBLIC\",Kind=\"Schema\"]}

[Data],\n ACCOUNT_Table = PUBLIC_Schema{[Name=\"ACCOUNT\",Kind=\"Table\"]}[Data]\nin\n ACCOUNT_Table";

Non-Quoted Strings: "let\n Source = Snowflake.Databases(DS_CONN,DW_CONN,[Role=DS_ROLE]),\n ANALYTICS_DB_Database =

Source{[Name=DB_CONN,Kind=\"Database\"]}[Data],\n INSIGHT_REPORT_Schema = ANALYTICS_DB_Database{[Name=DSCH_CONN,Kind=\

"Schema\"]}[Data],\n CUSTOMER_DIM_G_AGREEMENT_DIM_G_VW_View =

INSIGHT_REPORT_Schema{[Name=\"CUSTOMER_DIM_G_AGREEMENT_DIM_G_GBI_RGG_VW\",Kind=\"View\"]}[Data],\n #\"Removed Other Columns

\" = Table.SelectColumns(CUSTOMER_DIM_G_AGREEMENT_DIM_G_VW_View,{\"CustomerKey\", \"CustomerCd\", \"CustomerDesc\",

\"MasterAgreementDesc\"})\nin\n #\"Removed Other Columns\"

|

Query |

"let\n Source = Value.NativeQuery(Snowflake.Databases(\"hg51401.snowflakecomputing.com\",\"RESTAURANTS\"){[Name=\"FIVETRAN\

"]}[Data], \"select * from fivetran.restaurants_global_postsales.sc_new_monthly_churn\", null, [EnableFolding=true])\nin\n

Source";

Expression with Select keyword: "let\n Source = Value.NativeQuery(Snowflake.Databases(\"ppg-da01.snowflakecomputing.com\",\"SNOWFLAKE_POC_ETL_PRD_WH\",

[Role=\"DWHDEV04ALL\"]){[Name=\"DWHDEV04\"]}[Data], \"Select * From \"\"CURATED_PMC_SA\"\".\"\"D_CUSTOMER_VIEW\"\"\", null,

[EnableFolding=true]),\n FilterLogic = \n if \n NoOfRows = 0 \n then \n Source \n else \n

Table.FirstN(\n Source, \n 0\n )\nin\n FilterLogic"

Expression with multiple Select text in source string: "let\n Source = Value.NativeQuery(Snowflake.Databases(\"ppg-da01.snowflakecomputing.com\",\"SNOWFLAKE_POC_ETL_PRD_WH\",

[Role=“DWHUAT02_CURATED_PMC_SA_SELECT”]){[Name=\"DWHDEV04\"]}[Data], \"Select * From CURATED_PMC_SA.D_CALENDAR\", null,

[EnableFolding=true]),\n #\"Filtered Rows\" = Table.SelectRows(Source, each true)\nin\n #\"Filtered Rows\""

|

|

Google BigQuery |

Table |

Extraction from a Table: "let\n Source = GoogleBigQuery.Database(), #\"eng-scene-228201\" = Source{[Name=\"eng-scene-228201\"]}[Data],

HR_Data_Schema = #\"eng-scene-228201\"{[Name=\"HR_Data\",Kind=\"Schema\"]}[Data], HR_Recruiting_Table = HR_Data_Schema{

[Name=\"HR_Recruiting\",Kind=\"Table\"]}[Data]

in

HR_Recruiting_Table";

Extraction From a View: “let\n Source =

GoogleBigQuery.Database(), #\"test-alation-database-1\" = Source{[Name=\"test-alation-database-1\"]}[Data], profiling_Schema =

#\"test-alation-database-1\"{[Name=\"profiling\",Kind=\"Schema\"]}[Data],\n gbq_profile_View = profiling_Schema{[Name=

\"gbq_profile\",Kind=\"View\"]}[Data]\n

in\n gbq_profile_View”;

GBQ has default hostname - www.googleapis.com |

Query |

"let\n Source = Value.NativeQuery(GoogleBigQuery.Database(){[Name=\"test-alation-database-1\"]}[Data], \"select * from

`test-alation-database-1.CAPITALDATASET.columnprofiler`\", null, [EnableFolding=true])\nin\n Source";

|

|

Teradata |

Table |

"let\n Source = Teradata.Database(\"10.13.25.7\", [HierarchicalNavigation=true]),\n test_query_ingestion =

Source{[Schema=\"test_query_ingestion\"]}[Data],\n test2 = test_query_ingestion{[Name=\"test1\"]}[Data]\nin\n test2";

|

Query |

"let\n Source = Teradata.Database(\"10.13.25.7\", [HierarchicalNavigation=true, Query=\"SELECT * from test_query_ingestion.

test1 t1 left join test_query_ingestion.test2 t2 on 1=1\"])\nin\n Source";

|

|

Others (Supported from version 2.7.0) |

Table |

"let Source = #\"Odbc/dsn=dbricks_rap_dev\", SPARK_Database = Source{[Name=\"SPARK\",Kind=\"Database\"]}[Data], wheelq_Schema

= SPARK_Database{[Name=\"wheelq_json\",Kind=\"Schema\"]}[Data], d_channel_type_Table = wheelq_Schema{[Name=\"d_channel_type\",

Kind=\"Table\"]}[Data], #\"Changed Type\" = Table.TransformColumnTypes(d_channel_type_Table,{{\"dl_current\", type logical}}),

#\"Filtered Rows\" = Table.SelectRows(#\"Changed Type\", each [dl_current] = true), #\"Removed Other Columns\" =

Table.SelectColumns(#\"Filtered Rows\",{\"channel_type\", \"channel_typeId\"}) in #\"Removed Other Columns\""

"let Source = #\"SQL/VDB02TST;keh_dw\", dw_D_vakuutusasiakas = Source{[Schema=\"dw\",Item=\"D_vakuutusasiakas\"]}[Data],

#\"Filtered Rows\" = Table.SelectRows(dw_D_vakuutusasiakas, each ([Voimassa] = \"K\")), #\"Filtered Rows1\" =

Table.SelectRows(#\"Filtered Rows\", each [Poistettu_lahteesta] <> \"K\"), #\"Removed Columns\" =

Table.RemoveColumns(#\"Filtered Rows1\",{\"Voimassa_alkaen\", \"Voimassa_paattyy\", \"Lisaaja\", \"Muuttaja\",

\"Lisayshetki\", \"Poistettu_lahteesta\", \"Rivin_muuttaja_lahde\", \"Rivin_muutoshetki_lahde\", \"fk_vastuualue_lahde\",

\"Asiakas_tunniste_lahde\"}), #\"Replaced Value\" = Table.ReplaceValue(#\"Removed Columns\",null,\"Luokittelematon\",

Replacer.ReplaceValue,{\"Asiakkuussegmentti_nimi\"}) in #\"Replaced Value\""

"let Source = Databricks.Catalogs(\"adb-1398866281305367.7.azuredatabricks.net\", \"/sql/1.0/warehouses/ccd54c7b10fca7e1\",

[Catalog = null, Database = null, EnableAutomaticProxyDiscovery = \"enabled\"]), Navigation = Source{[Name = \"hub_qas\",

Kind = \"Database\"]}[Data], #\"Navigation 1\" = Navigation{[Name = \"g_mms\", Kind = \"Schema\"]}[Data],

#\"Navigation 2\" = #\"Navigation 1\"{[Name = \"v_account_bd_sfdc\", Kind = \"View\"]}[Data] in #\"Navigation 2\""

"let\n ソース = Snowflake.Databases(\"ok83915.ap-northeast-1.aws.snowflakecomputing.com\",\"VERIFICATION_WH\"),\n

ALATION_DEMO_Database = ソース{[Name=\"ALATION_DEMO\",Kind=\"Database\"]}[Data],\n

PUBLIC_Schema = ALATION_DEMO_Database{[Name=\"PUBLIC\",Kind=\"Schema\"]}[Data],\n

ORDERS_Table = PUBLIC_Schema{[Name=\"ORDERS\",Kind=\"Table\"]}[Data]\nin\n ORDERS_Table"

"let\n Source = #\"SQL/Damac-datawarehouse-dev sql azuresynapse net;synapse_dwh_dev\",\n

dwh_W_CASE_TASKS_F_vw = Source{[Schema=\"legal_dwh\",Item=\"W_CASE_TASKS_F_fusionvw\"]}[Data]\nin\n dwh_W_CASE_TASKS_F_vw"

"let\n Source = Vertica.Database(\"10.13.59.99\", \"alation\", []),\n

test_profiling_main_Schema = Source{[Name=\"test_profiling_main\",Kind=\"Schema\"]}[Data],\n

stpsale_Table = test_profiling_main_Schema{[Name=\"stpsale\",Kind=\"Table\"]}[Data]\nin\n stpsale_Table"

ORDERS_Table = PUBLIC_Schema{[Name=\"ORDERS\",Kind=\"Table\"]}[Data]\nin\n ORDERS_Table"

|

Query |

"let\n Source = Value.NativeQuery(GoogleBigQuery.Database([BillingProject=\"k-tps1-datalake-prod\"])

{[Name=\"k-tps1-datalake-prod\"]}[Data], \"--<Type>Native</Type>#(lf)--<Dataset>INS_BCM_DT_GroupCashPosition</Dataset>#(lf)

--<Queryname>calendar_dp</Queryname>#(lf)with sel as (SELECT #(lf)Date(calendar.date ) as date,#(lf)Date(calendar.date ) as

date_time,#(lf)year,#(lf)quarter,#(lf)case when calendar.month = 1 then \"\"01\"\"#(lf)when calendar.month = 2 then

\"\"02\"\"#(lf)when calendar.month = 3 then \"\"03\"\"#(lf)when calendar.month = 4 then \"\"04\"\"#(lf)when calendar.month = 5

then \"\"05\"\"#(lf)when calendar.month = 6 then \"\"06\"\"#(lf)when calendar.month = 7 then \"\"07\"\"#(lf)when

calendar.month = 8 then \"\"08\"\"#(lf)when calendar.month = 9 then \"\"09\"\"#(lf)else CAST (month AS string) end as months

,#(lf)weekday,#(lf)last_day(calendar.date) as End_of_month,#(lf)CONCAT(CONCAT(CAST(month_shortdesc AS STRING), \"\" \"\"),

year) as Month_and_year,#(lf)month_shortdesc,#(lf)day_in_month#(lf)#(lf) FROM `glb-calendar-prod.calendar.calendar` as

calendar#(lf) where#(lf) calendar_label = \"\"Gregorian\"\"#(lf)and (year >= 2019) )#(lf)#(lf)#(lf)select

sel.*,#(lf)concat(year , months) as Year_month,#(lf)#(lf) from sel\", null, [EnableFolding=true])\nin\n Source"

"let\n Source = Sql.Database(\"adfmartdbprd.icwgrp.com\", \"ADF_Mart\", [Query=\"IF (SELECT OBJECT_ID('tempdb..#Temp')) is

not null DROP TABLE #Temp#(lf)select distinct z.County_Nm#(lf) ,z.State_Code #(lf)#(tab) ,z.City_Nm#(lf)#(tab) ,

max(z.Longitude_Addr) as 'Longitude_Addr'#(lf)#(tab) ,max(z.Latitude_Addr) as 'Latitude_Addr'#(lf)#(tab) --,

z.zip_code#(lf)#(lf)into #Temp#(lf)#(tab) #(lf)from ADF_Mart.dbo.Dim_Zipcode z #(lf)where z.ADF_Active_Flag = 'Y' and

z.County_Nm is not null#(lf)and z.county_nm in ('ALAMEDA','CHESTER','DUPAGE','CLARK','LOS ANGELES','SACRAMENTO','SAN DIEGO',

'SEMINOLE','TRAVIS')#(lf)and z.City_Nm in ('PLEASANTON','WEST CHESTER','LISLE','LAS VEGAS','SACRAMENTO','SAN DIEGO',

'MID FLORIDA','AUSTIN','SANTA CLARITA','WOODLANDHILLS') --Need to add 'LA COUNTY'#(lf)--and z.state_code in ('CA','PA','IL',

'NV','OK','TX')#(lf)group by z.County_Nm,z.State_Code,z.City_Nm--,z.zip_code#(lf)order by z.county_nm#(lf)#(lf)select *

from #Temp\"]),\n #\"Capitalized Each Word\" = Table.TransformColumns(Source,{{\"County_Nm\", Text.Proper, type text}}),\n

#\"Changed Type\" = Table.TransformColumnTypes(#\"Capitalized Each Word\",{{\"County_Nm\", type text}})\nin\n

#\"Changed Type\""

|

Parameterised Expressions Supported by Lineage¶

Azure Power BI Scanner connector supports the parameterized expressions mentioned in the below table for Lineage:

Note

All parameterized expressions in the table below work with all supported data sources mentioned in Expressions Supported by Lineage.

Types of Parameterized Expressions |

Example Parameters used in Dataset Expression |

|---|---|

Name of the parameterized expression Example: BQEnv |

let

Source = GoogleBigQuery.Database(),

#"test-alation-database-1" = Source{[Name=BQEnv]}[Data],

PowerBiSchema_Schema = #"test-alation-database-1"{[Name="PowerBiSchema",Kind="Schema"]}[Data],

joinedTable_Table = PowerBiSchema_Schema{[Name="joinedTable",Kind="Table"]}[Data]

in

joinedTable_Table

|

Parameterized expression preceding with # Example: #”BQEnv” |

let

Source = GoogleBigQuery.Database(),

#"test-alation-database-1" = Source{[Name=#"BQEnv"]}[Data],

PowerBiSchema_Schema = #"test-alation-database-1"{[Name="PowerBiSchema",Kind="Schema"]}[Data],

joinedTable_Table = PowerBiSchema_Schema{[Name="joinedTable",Kind="Table"]}[Data]

in

joinedTable_Table

|

Parameterized expression used with skip character & Example: “”&BQEnv&”” |

let

Source = GoogleBigQuery.Database(),

#"test-alation-database-1" = Source{[Name=""&BQEnv&""]}[Data],

PowerBiSchema_Schema = #"test-alation-database-1"{[Name="PowerBiSchema",Kind="Schema"]}[Data],

joinedTable_Table = PowerBiSchema_Schema{[Name="joinedTable",Kind="Table"]}[Data]

in

joinedTable_Table

|

Parameterized expression used with skip character & and preceding with @ (Supported from connector version 2.5.0) Example: “”&@BQEnv&”” |

let

Source = GoogleBigQuery.Database(),

#"test-alation-database-1" = Source{[Name=""&@BQEnv&""]}[Data],

PowerBiSchema_Schema = #"test-alation-database-1"{[Name="PowerBiSchema",Kind="Schema"]}[Data],

joinedTable_Table = PowerBiSchema_Schema{[Name="joinedTable",Kind="Table"]}[Data]

in

joinedTable_Table

|

Parameterized expression used with skip character & and @ with n number of spaces between @ and parameter variable (Supported from connector version 2.6.0) Example: “”&@ BQEnv&”” |

let

Source = GoogleBigQuery.Database(),

#"test-alation-database-1" = Source{[Name=""&@ BQEnv&""]}[Data],

PowerBiSchema_Schema = #"test-alation-database-1"{[Name="PowerBiSchema",Kind="Schema"]}[Data],

joinedTable_Table = PowerBiSchema_Schema{[Name="joinedTable",Kind="Table"]}[Data]

in

joinedTable_Table

|

Parameterized expression preceding with @ (Supported from connector version 2.6.0) Example: @BQEnv |

let

Source = GoogleBigQuery.Database(),

#"test-alation-database-1" = Source{[Name=@BQEnv]}[Data],

PowerBiSchema_Schema = #"test-alation-database-1"{[Name="PowerBiSchema",Kind="Schema"]}[Data],

joinedTable_Table = PowerBiSchema_Schema{[Name="joinedTable",Kind="Table"]}[Data]

in

joinedTable_Table

|

Parameterized expression used with skip character & with n number of spaces between the skip character and parameter variable (Supported from connector version 2.6.0) Example: “”& BQEnv &”” |

let

Source = GoogleBigQuery.Database(),

#"test-alation-database-1" = Source{[Name=""& BQEnv &""]}[Data],

PowerBiSchema_Schema = #"test-alation-database-1"{[Name="PowerBiSchema",Kind="Schema"]}[Data],

joinedTable_Table = PowerBiSchema_Schema{[Name="joinedTable",Kind="Table"]}[Data]

in

joinedTable_Table

|

Scenarios Supported by the Connector for Parameter Usage¶

The connector is expected to accurately parse the parameters in critical parts of expressions and establish lineage. The critical parts of expressions are the host, database, schema, table, query, and any renamed columns. Parameters placed in these parts of an expression are essential for extracting the connection information required for lineage tracing. Parameters situated outside of these parts of an expression are less likely to impact the accuracy of the lineage.

Direct Usage¶

Parameters can act as placeholders for different variables in a dataset expression.

For example, the expression below includes placeholder parameters HostParameter, DatabaseParameter, SchemaParameter, and TableParameter. The connector is expected to parse such parameters and extract the necessary connection information for lineage.

"let\n Source = MySQL.Database(HostParameter, #\"DatabaseParameter\",

[ReturnSingleDatabase=true]),\n employees_departments =

Source{[Schema=\"\"&SchemaParameter&\"\",Item=TableParameter]}[Data]\nin\n

employees_departments";

Usage of the Ampersand Operator¶

The ampersand operator & may be used in expressions to concatenate text within hostnames or queries. In the example below, the ampersand operator is used to concatenate parts of a hostname and a query. The connector is expected to parse such expressions accurately.

"let\n Source = Value.NativeQuery(Snowflake.Databases(\"hg51401.\"

& hostParameter & \".com\",\"RESTAURANTS\"){[Name=\"FIVETRAN\"]}[Data],

\"select * from \" & SchemaNameParameter & \".restaurants_global_postsales.sc_new_monthly_churn\",

null, [EnableFolding=true])\nin\n Source";

Note

Using the ampersand operator at the beginning or end of a hostname or query may result in incorrect parsing by the connector, potentially causing incomplete lineage.

Column-Level Lineage Expressions¶

Datasets¶

"let\n Source = Sql.Databases(\"ifac-sqlsrv.ceeyrlqdpprr.us-west-2.rds.amazonaws.com:1433\"),\n

Sales = Source{[Name=\"Sales\"]}[Data],\n

dbo_Orders_Details = Sales{[Schema=\"dbo\",Item=\"Orders_Details\"]}[Data],\n

#\"Renamed Columns\" = Table.RenameColumns(dbo_Orders_Details,{{\"Discount\",

\"Discount_field_of_dataset\"}, {\"OrderID\", \"OrderID_field_of_dataset\"},

{\"ProductID\", \"ProductID_field_of_dataset\"}})\nin\n #\"Renamed Columns\"";

"let\r\n Source = Sql.Database(#\"Warehouse server\", #\"Warehouse name\"),\r\n

Warehouse_Date = Source{[Schema=\"Warehouse\",Item=\"Date\"]}[Data],\r\n

#\"Renamed Columns\" = Table.RenameColumns(#\"Removed Other Columns\",

{{\"Calendar YWD Week Of Year_Caption_1033\", \"YWD Week Of Year\"},

{\"Calendar YWD Week_Caption_1033\", \"YWD Week - Year\"},

{\"Calendar YWD Year_Caption_1033\", \"YWD Year\"}, {\"Calendar YQMD Month Of Year_Caption_1033\",

\"YQMD Month Of Year\"}, {\"Calendar YQMD Month_Caption_1033\",

\"YQMD Month - Year\"}, {\"Calendar YQMD Quarter Of Year_Caption_1033\",

\"YQMD Quarter Of Year\"}})\r\nin\r\n #\"Renamed Columns\"";

"let\r\n Source = Cds.Entities(#\"Dynamics 365 URL\", [ReorderColumns=null,

UseFormattedValue=null]),\r\n entities = Source{[Group=\"entities\"]}[Data],\r\n

msfp_questions = entities{[EntitySetName=\"msfp_questions\"]}[Data],\r\n

#\"Renamed Columns\" = Table.RenameColumns(msfp_questions,{\r\n{\"createdby\",

\"Created By\"},\r\n{\"createdon\",\"Created On\"}, \r\n{\"createdonbehalfby\",\"Created By

(Delegate)\"},\r\n{\"importsequencenumber\",\"Import Sequence Number\"},\r\n{\"modifiedby\",

\"Modified By\"},\r\n{\"modifiedon\",\"Modified On\"},\r\n{\"modifiedonbehalfby\",

\"Modified By (Delegate)\"}\r\n})\r\nin\r\n #\"Renamed Columns\""

"let\n Source = OData.Feed(Company1, null, [Implementation=\"2.0\"]),\n

PBICloseIncStmtGLEntries = Source[PBIClosingGLEntries],\n

#\"Renamed Columns\" = Table.RenameColumns(PBICloseIncStmtGLEntries,\n

{{\"G_L_Account_No\", \"G/L Account No.\"}}),

#\"Changed Type\" = Table.TransformColumnTypes(#\"Renamed Columns\",

{{\"Posting_Date\", type date}}),\n

#\"Renamed Columns1\" = Table.RenameColumns(#\"Changed Type\",

{{\"Posting_Date\", \"Posting Date\"}, {\"Amount\", \"Amt.\"}}),\n

#\"Renamed Columns2\" = Table.RenameColumns(#\"Changed Type2\",

{{\"SmallIconUri\", \"Thumbnail\"}, {\"Description\",

\"Description Full\"}, {\"ReleaseDate\", \"Release Date\"}, {\"AppVersion\",

\"Version\"}, {\"NumberOfRatings\", \"# of Ratings\"}, {\"AverageRating\",

\"Average Rating\"}, {\"Tags\", \"Certified\"},

{\"ShortDescription\", \"Description\"}})\nin\n #\"Changed Type\""

Dataflows¶

"section Section1;\r\nshared Query = let\r\n Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText(\"i45WMlTSUTIyVorViVYyBjJNzZRiYwE=\",

BinaryEncoding.Base64), Compression.Deflate)),

let _t = ((type nullable text) meta [Serialized.Text = true]) in type table

[#\"test 1\" = _t, #\"test 2\" = _t]),

\r\n #\"Changed column type\" = Table.TransformColumnTypes(Source, {{\"test 1\", Int64.Type},

{\"test 2\", Int64.Type}}),\r\n #\"Renamed columns\" = Table.RenameColumns(#\"Changed column type\",

{{\"test 2\", \"test 4\"}})\r\nin\r\n

#\"Renamed columns\";\r\nshared #\"Query (2)\" = let\r\n

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText(\"i45WMlTSUTICYmOlWJ1oMMsEiM2UYmMB\",

BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true])

in type table [Column1 = _t, Column2 = _t, Column3 = _t]),

\r\n #\"Renamed columns\" = Table.RenameColumns(#\"Changed column type\",

{{\"Column3\", \"Column34\"}, {\"Column2\", \"Column23\"}})\r\nin\r\n

#\"Renamed columns\";\r\nshared Table = let\r\n

Source = Table.FromRows(Json.Document(Binary.Decompress(Binary.FromText(\"i45WMlTSUTICYmOl2FgA\",

BinaryEncoding.Base64), Compression.Deflate)), let _t = ((type nullable text) meta [Serialized.Text = true])

in type table [#\"A 1 \" = _t, #\"A 2\" = _t, A3 = _t]),

\r\n #\"Changed column type\" = Table.TransformColumnTypes(Source, {{\"A 1 \", Int64.Type}, {\"A 2\",

Int64.Type}, {\"A3\", Int64.Type}}),\r\n

#\"Renamed columns\" = Table.RenameColumns(#\"Changed column type\",

{{\"A 1 \", \"B1\"}, {\"A 2\", \"B2\"}, {\"A3\", \"B3\"}})\r\nin\r\n #\"Renamed columns\";\r\n";

Lineage Limitations¶

Lineage¶

You must upgrade to Alation version 2023.3.2 or higher to be able to generate cross-system lineage from Databricks Unity Catalog with connector version 2.1.0 or higher.

To use lineage with UC Databricks in Alation version 2023.3.2, enable the flag

alation.resolution.DEV_bi_connector_returns_db_for_two_part_schema. No flag is required from Alation version 2023.3.3 onwards for cross-system lineage to be generated between Databricks Unity Catalog and Power BI.Lineage for paginated reports (RDL) is not supported. Paginated reports do not contain the dataset ID required to show lineage between a Power BI dataset and a Power BI paginated report.

Lineage between data source tables and datasets is built through parsing dataset expressions which are Power BI M Queries (Power Queries). Alation gets these expressions as a response from the getScanResult API. See Expressions Supported by Lineage for examples of supported expressions.

Power BI Scanner API doesn’t return datasets with object-level security. As a result, Alation will not show connections, fields, and lineage for such datasets.

Limitations Specific to Column-Level Lineage¶

Power BI column-level lineage is not supported for the following objects:

Power BI dashboards

Column-level lineage is not shown between a report and a dashboard or a tile and a dashboard

Power BI tiles

Column-level lineage is not shown between an upstream source and a tile

Power BI report measures

If a data source column name is modified in Power BI in a dataset or a dataflow, then column-level lineage is supported only if Alation gets the renamed column information in one of the supported formats as part of the dataset expression in the scanner API response.

Note

From Power BI Scanner OCF connector version 2.2.1, the connector supports the parsing of queries that contain table names with periods (.) in them. The table names must be enclosed in quotes to ensure correct parsing, for example:

SELECT a.name, a.id FROM schema.\"Table.name\" a